The Ultimate Guide to Web Scraping with Google Apps Script and Google Sheets

Unlock the power of web data without needing advanced coding skills! This tutorial demonstrates how to effortlessly scrape and extract information from websites directly into Google Sheets using Google Apps Script, opening up a world of possibilities for businesses. Automate market research, competitor analysis, and data collection, saving countless hours of manual work and gaining valuable insights—all without requiring extensive HTML knowledge.

What is Web Scraping?

Web scraping is akin to automatically copying and pasting information from websites. Imagine having a digital assistant that visits websites and automatically collects specified information. Instead of manually selecting and copying text or images, a program performs this task for you, gathering data such as prices, product details, or contact information.

This extracted data can then be used for various purposes, including comparing prices across different online retailers, collecting sales leads, creating notifications for content changes, and more. Essentially, web scraping automates tedious manual work, saving significant time and effort by efficiently extracting and organizing large amounts of web data.

Why Use Web Scraping for Businesses?

In today’s competitive business environment, productivity and optimized resource allocation are crucial for success. Web scraping is a valuable tool for achieving these goals, significantly improving productivity by automating time-consuming data collection and analysis.

By automating the extraction of data from websites, businesses save considerable time and effort compared to manual methods. This increased efficiency allows teams to focus on strategic initiatives rather than repetitive tasks.

Furthermore, the data gathered through web scraping is not merely an end in itself; it serves as crucial input for further automation processes. For instance, scraped data can power dynamic, competition-based pricing strategies in e-commerce or automatically identify valuable investment opportunities by aggregating real estate listings from multiple portals.

This seamless integration with other automation workflows amplifies the impact of web scraping, making it an indispensable asset for any forward-thinking business.

Introducing Google Apps Script and Google Sheets

Google Apps Script is a cloud-based scripting language built on JavaScript that empowers you to automate tasks and build powerful integrations within Google Workspace (formerly G Suite). Its unique advantage lies in its ability to seamlessly connect various Google services, including Gmail, Docs, Drive, Calendar, and, importantly, Sheets, making it exceptionally well-suited for building comprehensive automations. This interconnectedness allows you to create workflows that span across multiple applications, streamlining processes and boosting productivity.

For web scraping specifically, Apps Script’s UrlFetchApp service allows you to fetch content directly from web pages, enabling automated data extraction and integration with the rest of your Google Workspace applications. By sending HTTP requests to websites, you can retrieve HTML, XML, or JSON data. This extracted data can then be parsed and structured using JavaScript within the script.

The true power of this approach is realized when combined with Google Sheets: scraped data can be directly written into spreadsheets, providing a convenient and familiar environment for data storage, organization, analysis, visualization, and further automation.

Step-by-Step Guide to Web Scraping with Google Apps Script and Google Sheets

The Idea

We’ll create a system that does the following:

- Reads a list of website addresses (URLs) from a Google Sheet.

- Visits each website and grabs its HTML content.

- Finds the predefined information (product price in our case) within that HTML.

- Writes the extracted prices back to the Google Sheet.

Setting up the Google Sheet

First, you need a Google Sheet.Your Google Sheet should have at least two columns:

- Column A (Column 1): This column will contain the website addresses (URLs) of the products you want to track.

- Column B (Column 2): This column will contain the CSS selector used to identify and extract the specific element (like the price) from the web page.

- Column C (Column 3): This column will store the prices extracted from the websites.

Here you can find a template for the spreadsheet.

Finding the Right Element: How to Get the CSS Selector

The next crucial step for your data extraction tool is telling it exactly which piece of information to grab from the webpage. This is where the CSS selector comes in.

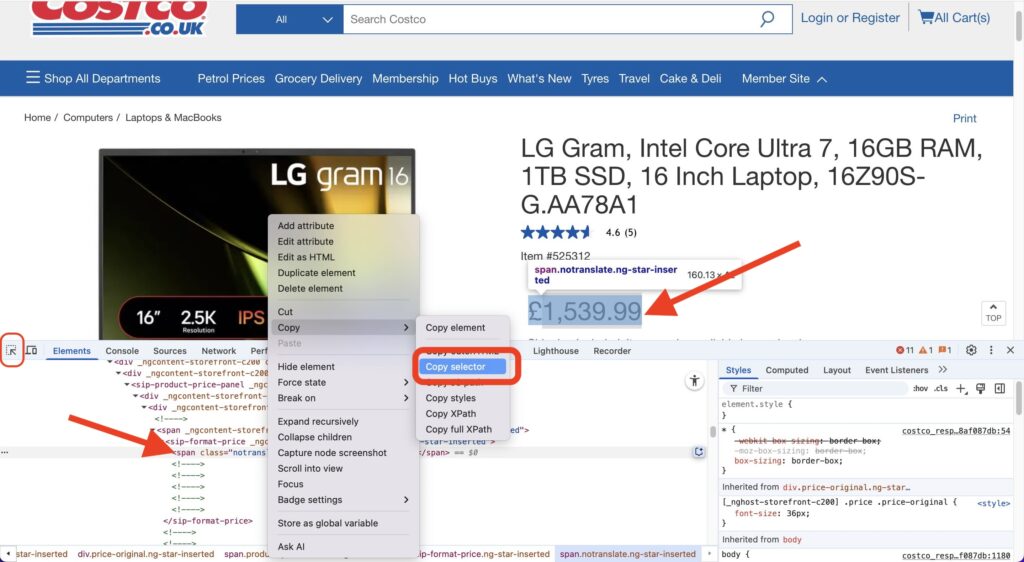

Think of a CSS selector as an address for a specific element on a web page – like the price, a product title, or a review count. Here’s how you can easily find this address using the Chrome browser:

- Open the Webpage: Go to the specific product page (or any page with the element you want to extract).

- Open Developer Tools: Right-click anywhere on the page and select “Inspect”. This opens the Developer Tools panel, usually at the bottom or side of your browser window. Make sure you’re on the “Elements” tab.

- Use the Element Picker: In the top-left corner of the Developer Tools panel, you’ll see a small icon that looks like an arrow inside a box (or sometimes just a cursor icon). Click on this icon. This activates the “select element” mode.

- Hover and Click: Now, move your cursor over the elements on the webpage. As you hover, the element under your cursor will be highlighted. Click on the specific element you want to extract (e.g., the price display).

- Locate in the HTML: Clicking the element picker will jump to and highlight that element’s code within the “Elements” tab of the Developer Tools.

- Copy the Selector: Right-click on the highlighted line of code in the Developer Tools panel. In the context menu that appears, hover over Copy and then click Copy selector.

That’s it! The CSS selector for that specific element is now copied to your clipboard. This is the value you would then paste into Column C of your Google Sheet for that product URL, telling your extraction script exactly what to look for on that page.

The Code (Google Apps Script)

Now, let’s look at the code that does the magic. Open the script editor in your Google Sheet by going to “Tools” > “Script editor”. Copy and paste the code provided below into the script editor.

/**

* Fetches product prices from URLs in a Google Sheet and updates the sheet with the prices.

*/

// Configuration (Update these with your actual values)

const SHEET_ID = `YOUR_SHEET_ID`; // Replace with your actual Sheet ID

const SHEET_NAME = `YOUR_SHEET_NAME`; // Replace with your actual Sheet Name

const URLS_COLUMN_ID = 1; // Column containing URLs (A = 1)

const CSS_SELECTOR_COLUMN = 2; // Column containing CSS selectors (B = 2)

const PRICE_COLUMN = 3; // Column to write prices (C = 3)

const REQUEST_DELAY = 1000; // Delay between requests in milliseconds (1 second)

/**

* Main function to run the script.

*/

function main() {

const urlData = getUrlsFromSheet();

if (urlData.length === 0) {

Logger.log("No URLs to process.");

return;

}

const prices = [];

for (let i = 0; i < urlData.length; i++) {

const url = urlData[i].url;

const selector = urlData[i].selector;

const html = fetchHtml(url);

const price = extractPrice(html, selector);

prices.push(price);

Utilities.sleep(REQUEST_DELAY); // Delay between requests

Logger.log(`Processed URL ${i+1}/${urlData.length}: ${url}, Price: ${price}`);

}

updateSheet(prices);

}

/**

* Opens the spreadsheet and gets the URLs and selectors.

* @return {Array<{url: string, selector: string}>} An array of objects containing URLs and selectors.

*/

function getUrlsFromSheet() {

const ss = SpreadsheetApp.openById(SHEET_ID);

const sheet = ss.getSheetByName(SHEET_NAME);

const lastRow = sheet.getLastRow();

if (lastRow < 2) return []; // Handle empty sheet

const urls = sheet.getRange(2, URLS_COLUMN_ID, lastRow - 1)

.getValues()

.flat(); // Get values as a 1D array.

const selectors = sheet.getRange(2, CSS_SELECTOR_COLUMN, lastRow - 1)

.getValues()

.flat(); // Get values as a 1D array.

const urlData = [];

for (let i = 0; i < urls.length; i++) {

urlData.push({

url: urls[i],

selector: selectors[i]

});

}

return urlData;

}

/**

* Fetches the HTML content of a URL.

* @param {string} url The URL to fetch.

* @return {string|null} The HTML content, or null if there's an error.

*/

function fetchHtml(url) {

try {

const options = {

method: 'get',

muteHttpExceptions: true,

headers: { // Added some common headers

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36',

'Accept-Language': 'en-US,en;q=0.9',

'Cache-Control': 'no-cache'

}

};

const response = UrlFetchApp.fetch(url, options);

if (response.getResponseCode() === 200) {

return response.getContentText();

} else {

Logger.log(`Error fetching ${url}: ${response.getResponseCode()} - ${response.getContentText()}`);

return null;

}

} catch (error) {

Logger.log(`Error fetching ${url}: ${error}`);

return null;

}

}

/**

* Extracts the price from the HTML using Cheerio.

* @param {string} html The HTML content.

* @param {string} selector The CSS selector to use.

* @return {number|null} The extracted price as a number, or null if not found.

*/

function extractPrice(html, selector) { // Added selector parameter

if (!html || !selector) return null; // Added check for selector

try {

const $ = Cheerio.load(html);

let priceText = $(selector).text(); // Use provided selector

if (!priceText) {

Logger.log("Price element not found using selector: " + selector);

return null;

}

priceText = priceText.replace(/,/g,'.');

let price = parseFloat(priceText.replace(/[^0-9.]/g, ''));

if (isNaN(price)) {

Logger.log("Could not parse price to a number: " + priceText);

return null;

}

return price;

} catch (error) {

Logger.log("Error extracting price: " + error);

return null;

}

}

/**

* Updates the Google Sheet with the extracted prices.

* @param {Array<number|null>} prices An array of prices to write to the sheet.

*/

function updateSheet(prices) {

if (prices.length === 0) return;

const ss = SpreadsheetApp.openById(SHEET_ID);

const sheet = ss.getSheetByName(SHEET_NAME);

const lastRow = sheet.getLastRow();

const urlsCount = sheet.getRange(2, URLS_COLUMN_ID, lastRow - 1).getValues().flat().length;

if (prices.length !== urlsCount) {

Logger.log(`Number of prices (${prices.length}) does not match the number of URLs (${urlsCount}).`);

return;

}

sheet.getRange(2, PRICE_COLUMN, prices.length, 1).setValues(prices.map(price => [price]));

}Before you start using this code, please don’t forget to include the Cheerio library. This third-party library helps us process HTML more easily and must be added to our project manually. To do this:

- In the Apps Script editor, click on the “Resources” menu and select “Libraries…”

- A dialog box will appear. Enter the following Script ID into the input field:

1ReeQ6WO8kKNxoaA_O0XEQ589cIrRvEBA9qcWpNqdOP17i47u6N9M5Xh0 - After entering the Script ID, click the “Look up” button.

- If the Script ID is valid, the library’s information (name and versions) will appear. Choose the specific version of the library you want to use from the dropdown menu. It’s generally recommended to use a specific version rather than “latest” for stability.

- Once you’ve selected the version, click the “Add” button.

The library should now appear in the “Libraries” dialog box.

Explanation of the Code

Our Google Apps Script for tracking product prices is built from several key functions working together. Let’s break down what each one does:

1. main()

- Purpose: This is the conductor of our orchestra! The

mainfunction is the primary entry point that orchestrates the entire process of fetching and updating prices. It calls other functions in the correct sequence to get the job done. - Structure:

- It starts by calling

getUrlsFromSheet()to fetch the list of URLs and their corresponding CSS selectors from your spreadsheet. - It then loops through each URL/selector pair.

- Inside the loop, for each item:

- It calls

WorkspaceHtml()to download the webpage’s content. - It passes the downloaded HTML and the selector to

extractPrice()to find the price. - It adds the found price (or null if not found) to a list.

- It pauses briefly (

Utilities.sleep()) to avoid overloading the websites.

- It calls

- Finally, it calls

updateSheet()to write all the collected prices back to the spreadsheet.

- It starts by calling

- Analogy: Think of

main()as a Project Manager. It doesn’t do the specialized tasks itself, but it knows the overall goal and directs the specialists (getUrlsFromSheet,WorkspaceHtml,extractPrice,updateSheet) in the right order, ensuring the project (price tracking) is completed step-by-step.

2. getUrlsFromSheet()

- Purpose: This function acts as the data retriever. Its job is to open your specified Google Sheet and read the list of product page URLs and the CSS selectors needed to find the prices on those pages.

- Structure:

- Opens the Google Spreadsheet using its ID (

SHEET_ID). - Selects the specific sheet within that spreadsheet using its name (

SHEET_NAME). - Determines how many rows have data.

- Reads the values from the URL column (

URLS_COLUMN_ID). - Reads the values from the CSS Selector column (

CSS_SELECTOR_COLUMN). - Combines these into a list where each item contains both the URL and its corresponding selector.

- Returns this list of URL/selector pairs.

- Opens the Google Spreadsheet using its ID (

- Analogy: This function is like a Librarian. You give it the location (Sheet ID and Name) and the type of information you need (URLs and Selectors from specific columns), and it goes to the shelves (the Sheet), pulls out the requested lists, and hands them over neatly organized.

3. WorkspaceHtml()

- Purpose: This function’s role is to visit a specific web address (URL) and download the raw HTML source code of that page. This code is what the browser uses to display the webpage, and it contains the price information we need.

- Structure:

- Takes a single

urlas input. - Uses Google Apps Script’s

UrlFetchApp.fetch()service to make a request to that URL. - It includes specific

options(likeUser-Agentheaders) to make the request look more like it’s coming from a standard web browser, which can help prevent being blocked by some websites. - It checks if the website responded successfully (HTTP status code 200).

- If successful, it returns the downloaded HTML code as text.

- If there’s an error (like the page not found or access denied), it logs the error and returns

null.

- Takes a single

- Analogy: Imagine

WorkspaceHtml()as a Web Scout. You give it an address (URL), and it goes to that location on the internet, makes a copy of the page’s underlying blueprint (the HTML), and brings that blueprint back to you. It even tries to look like a regular visitor to avoid suspicion.

4. extractPrice()

- Purpose: This is the specialized extractor. Given the raw HTML code of a page and a specific CSS selector (which acts like a map coordinate), this function finds the price text, cleans it up, and converts it into a numerical value.

- Structure:

- Takes the

htmlcontent and theselectoras input. - Uses the

Cheeriolibrary (which needs to be added to your Apps Script project) to parse the HTML, making it easy to search. - Uses the provided

selectorto pinpoint the exact HTML element containing the price. - Extracts the text content from that element.

- Cleans the text: removes currency symbols, thousands separators (like commas), and any other non-numeric characters except the decimal point. It also standardizes decimal separators to periods.

- Converts the cleaned text into a floating-point number.

- If any step fails (e.g., selector not found, text can’t be converted to a number), it logs the issue and returns

null. Otherwise, it returns the numerical price.

- Takes the

- Analogy: Think of

extractPrice()as a Data Detective. It receives a large document (HTML) and a specific clue (the CSS selector). It uses the clue to find the exact piece of data (the price text), carefully cleans off any obscuring marks (currency symbols, commas), and reports the final numerical value found.

5. updateSheet()

- Purpose: This function is the final step, responsible for taking the list of extracted prices and writing them back into the designated column in your Google Sheet.

- Structure:

- Takes the

pricesarray (collected by themainfunction) as input. - Opens the target Google Spreadsheet and Sheet (similar to

getUrlsFromSheet). - Performs a safety check: It verifies that the number of prices collected matches the number of URLs originally read from the sheet to avoid writing data in the wrong rows if some fetches failed.

- Selects the correct range in the output column (

PRICE_COLUMN), starting from the second row and spanning the necessary number of rows. - Formats the simple list of prices into a 2D array format that Google Sheets requires for writing (

[[price1], [price2], ...]). - Writes the formatted prices into the selected range in the sheet using

setValues().

- Takes the

- Analogy: This function is the Accountant or Scribe. It takes the final report (the list of prices) generated by the team and carefully enters each value into the correct cell in the ledger (the Google Sheet), ensuring accuracy and proper placement.

Running the Code

After pasting the code into the script editor:

- Save the script.

- Run the

main()function. You’ll need to authorize the script to access your Google Sheet.

Important Notes:

- The

extractPrice(html)function is the most website-specific part. The way prices are displayed in HTML varies from website to website. Therefore, you might need to adjust the code within this function to match the structure of the websites you’re tracking. You’ll likely need to inspect the website’s HTML source to find a suitable CSS selector. - The code includes a 1-second delay between requests. This is important to be polite to the websites you’re scraping and avoid getting blocked.

This automated system will save you time and effort by automatically tracking product prices for you. By understanding the basics of how it works, you can even customize it further to suit your specific needs.

Scheduling the Script to Run Daily

The last step is to schedule your script to run automatically every day at the desired time.

Create a Time-Based Trigger

- Go to “Edit” -> “Current project’s triggers”.

- Click “Add Trigger”.

- Select the

main()function. - Choose “Time-driven” as the event source.

- Select “Day timer” and specify the desired time (e.g., 00:00 for midnight).

- Save the trigger.

Tips and Limitations

While Google Apps Script’s UrlFetchApp is effective for many websites, it may not be suitable for heavily protected sites that employ anti-scraping measures such as CAPTCHAs and IP blocking. Attempting to bypass these protections with Apps Script alone is often unreliable.

Scraping such portals or engaging in “black hat” practices requires dedicated third-party solutions that use techniques like rotating proxies and CAPTCHA solvers. Examples of such services include Apify, Bright Data, ParseHub, and Scraper API.

Importantly, these services can often be integrated into Google Workspace automation processes. This means you can still build a complete, automated workflow within your Workspace infrastructure, even if a third-party scraping solution is necessary. Data extracted by these services can be seamlessly fed into Google Sheets, Docs, or other Workspace apps using their APIs or webhooks. It is highly recommended to respect website terms of service and `robots.txt` and to scrape responsibly and ethically.

UrlFetchApp Quotas

Google Workspace imposes quotas and limitations on `UrlFetchApp` to prevent abuse, maintain service stability, and ensure fair usage across all users. These limitations are crucial to understand when designing web scraping scripts:

- Execution Time: There’s a daily limit on the total time your Apps Script project can run. As of this writing, this limit is typically around 6 minutes per execution for most Workspace accounts. This means if your script runs for an extended period due to numerous

UrlFetchAppcalls or complex processing, it might reach this limit and stop executing. However, you can often restart the script after it has stopped due to hitting the execution time limit, allowing you to process larger datasets or perform more complex tasks in stages. - Number of Calls: There are restrictions on how many

UrlFetchApprequests you can make within specific timeframes. These limits are designed to prevent overwhelming target websites with requests. As of this writing, free Google accounts typically have a daily limit of around 20,000 `UrlFetchApp` calls, while Google Workspace accounts generally have a much higher limit, around 100,000 calls per day. - Sequential Approach vs. `fetchAll()`: Although Apps Script operates in a single-threaded environment and doesn’t offer true simultaneous fetching, using

UrlFetchApp.fetchAll()allows for optimized retrieval of multiple URLs. This method significantly reduces overhead compared to sequential fetching by handling network communication more efficiently, even though the requests are not executed in parallel.

Conclusion

In summary, web scraping with Google Apps Script and Google Sheets offers a powerful, accessible, and importantly, free way for businesses to automate data collection and gain valuable insights. Google Apps Script is available for use with both free Google accounts and Google Workspace subscriptions, making it a cost-effective solution for businesses of all sizes.

Furthermore, if you’re using Google Workspace, relying on Apps Script for your web scraping needs offers unparalleled flexibility and integration within your existing business workflows. Unlike rigid third-party solutions that often require complex integrations and data transfer processes, Apps Script seamlessly connects with other Workspace apps.

We encourage you to explore the possibilities of web scraping with Google Apps Script and Sheets for your own business needs and unlock the potential of automated data collection for improved efficiency and strategic advantage. The number of scenarios where this powerful combination can be applied is virtually endless; from automating market research and competitor analysis to streamlining lead generation and content aggregation, only your imagination limits the potential use cases.

About the Author

Attila

I am a Senior Data Analyst and Automation Specialist with 15+ years of experience building practical solutions on Google Workspace to supercharge your productivity. Let me transform your raw data into a decisive competitive advantage and automate your workflows, all within the platform your team already knows.